
Getty Images

When you read a sentence like this one, your past experience tells you that it’s written by a thinking, feeling human. And, in this case, there is indeed a human typing these words: [Hi, there!] But these days, some sentences that appear remarkably humanlike are actually generated by artificial intelligence systems trained on massive amounts of human text.

People are so accustomed to assuming that fluent language comes from a thinking, feeling human that evidence to the contrary can be difficult to wrap your head around. How are people likely to navigate this relatively uncharted territory? Because of a persistent tendency to associate fluent expression with fluent thought, it is natural, but potentially misleading, to think that if an AI model can express itself fluently, it must think and feel just as humans do.

So, it is perhaps unsurprising that a former Google engineer recently claimed that Google’s AI system LaMDA has a sense of self because it can eloquently generate text about its purported thoughts. This event and the subsequent media coverage led to a number of rightly skeptical articles and posts about the claim that computational models of human language are sentient, meaning capable of thinking, feeling and experiencing.

The question of what it would mean for an AI model to be sentient is complicated (see, for instance, our colleague’s take), and our goal here is not to settle it. But as language researchers, we can use our work in cognitive science and linguistics to explain why it is all too easy for humans to fall into the cognitive trap of thinking that an entity that can use language fluently is sentient, conscious or intelligent.

Using AI to generate humanlike language

Text generated by models like Google’s LaMDA can be hard to distinguish from text written by humans. This impressive achievement is the result of a decades-long program to build models that generate grammatical, meaningful language.

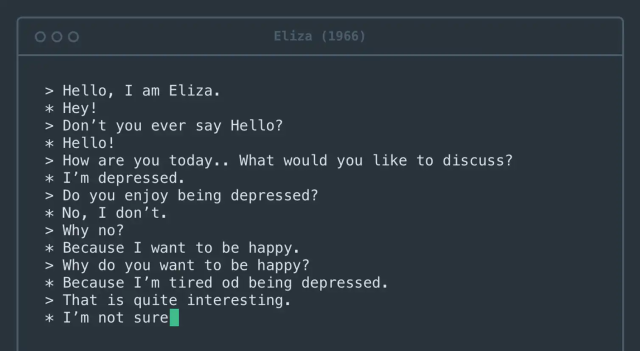

Early versions dating back to at least the 1950s, known as n-gram models, simply counted up occurrences of specific phrases and used them to guess what words were likely to occur in particular contexts. For instance, it’s easy to know that “peanut butter and jelly” is a more likely phrase than “peanut butter and pineapples.” If you have enough English text, you will see the phrase “peanut butter and jelly” again and again but might never see the phrase “peanut butter and pineapples.”
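To make the counting idea concrete, here is a minimal sketch in Python. The tiny corpus and the helper name `count_ngrams` are invented for illustration and are not taken from any real system; an actual n-gram model would be built from a far larger body of text.

```python
from collections import Counter


def count_ngrams(text, n):
    """Count every run of n consecutive words in the text."""
    words = text.lower().split()
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


# Toy corpus, made up for this example only.
corpus = (
    "i ate peanut butter and jelly for lunch . "
    "she loves peanut butter and jelly sandwiches . "
    "peanut butter and jelly is a classic . "
    "he bought peanut butter and bread ."
)

counts = count_ngrams(corpus, 4)

# A Counter returns 0 for phrases it has never seen.
jelly = counts[("peanut", "butter", "and", "jelly")]
pineapples = counts[("peanut", "butter", "and", "pineapples")]

print(f"'peanut butter and jelly' seen {jelly} times")
print(f"'peanut butter and pineapples' seen {pineapples} times")
```

Even in this small sample, the familiar phrase turns up repeatedly while the unusual one never appears, which is all the information a counting model has to go on.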

Today’s models, sets of data and rules that approximate human language, differ from these early attempts in several important ways. First, they are trained on essentially the entire Internet. Second, they can learn relationships between words that are far apart, not just words that are neighbors. Third, they are tuned by a huge number of internal “knobs,” so many that it is hard for even the engineers who design them to understand why they generate one sequence of words rather than another.

The models’ task, however, remains the same as in the 1950s: determine which word is likely to come next. Today, they are so good at this task that almost all sentences they generate seem fluid and grammatical.
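The next-word task itself can be sketched in a few lines of Python. This is the counting-style version of the task, not how a modern system such as LaMDA works internally; the corpus and the function names `train_bigrams` and `predict_next` are assumptions made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For each word, count which words follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of word, or None if it was never seen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Toy corpus, made up for this example only.
corpus = "peanut butter and jelly . peanut butter and jelly . bread and butter ."
model = train_bigrams(corpus)

print(predict_next(model, "and"))         # most common word after "and"
print(predict_next(model, "pineapples"))  # a word the model has never seen
```

A model like this only looks one word back, which is why the ability of today's systems to relate words that are far apart matters so much.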
